Operator Deployment

This chapter walks through deploying Prometheus on Kubernetes with Prometheus Operator.

| OS | Kernel | Kubernetes | Prometheus-Operator | Deployment method |
|---|---|---|---|---|
| Ubuntu 18.04 | 4.15.0-112-generic | 1.17.7 | 0.41.1 | Operator |

The official GitHub repository notes that Prometheus Operator >= 0.39.0 requires a Kubernetes cluster >= 1.16.0. This walkthrough uses Kubernetes 1.17.7 and prometheus-operator 0.41.1.
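The version constraint above can be checked mechanically before installing. The following is a minimal sketch, assuming GNU `sort -V` is available (as it is on Ubuntu). The helper name `version_ge` is hypothetical, and the version strings are copied from the environment table rather than read from a live cluster.

```shell
# Returns 0 when version $1 >= version $2 (relies on GNU `sort -V`).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

K8S_VERSION="1.17.7"        # taken from the environment table
OPERATOR_VERSION="0.41.1"   # taken from the environment table

# Operator >= 0.39.0 requires Kubernetes >= 1.16.0.
if version_ge "$OPERATOR_VERSION" "0.39.0" && ! version_ge "$K8S_VERSION" "1.16.0"; then
  echo "incompatible: Prometheus Operator $OPERATOR_VERSION needs Kubernetes >= 1.16.0"
else
  echo "compatible: Kubernetes $K8S_VERSION with Prometheus Operator $OPERATOR_VERSION"
fi
```

On a real cluster the two variables could instead be filled from `kubectl version` and the operator image tag.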

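What Prometheus Operator does in a Kubernetes cluster

- Installs seamlessly using Kubernetes-native configuration options.
- Quickly creates and destroys Prometheus instances per Kubernetes namespace or per application.
- Configures Prometheus from native Kubernetes resources, including version, persistence, retention policy, and replicas.
- Discovers target services by label and automatically generates monitoring target configuration from familiar Kubernetes label queries.

For example, when a Pod or Service is destroyed and comes back, Prometheus Operator regenerates the configuration automatically, with no manual intervention.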

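Components involved in an Operator

- Custom Resource Definition (CRD): defines a new custom resource with a specified name and schema, without any coding. The Kubernetes API server handles storage of the custom resource.
- Custom Resource (CR): a resource object that extends the Kubernetes API or introduces a custom API into the cluster.
- Custom Controller: handles built-in Kubernetes objects such as Deployments and Services in new ways, or manages custom resources as if they were native Kubernetes components.
- Operator Pattern: a CRD and a custom controller used together.
- An Operator builds on the Kubernetes concepts of resources and controllers, and adds configuration that lets it carry out common application tasks.
- An Operator is purpose-built to run a Kubernetes application and carries the operational knowledge for that application.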

Operator 工作流程¶

Operator 是在后台执行以下活动来管理自定义资源。

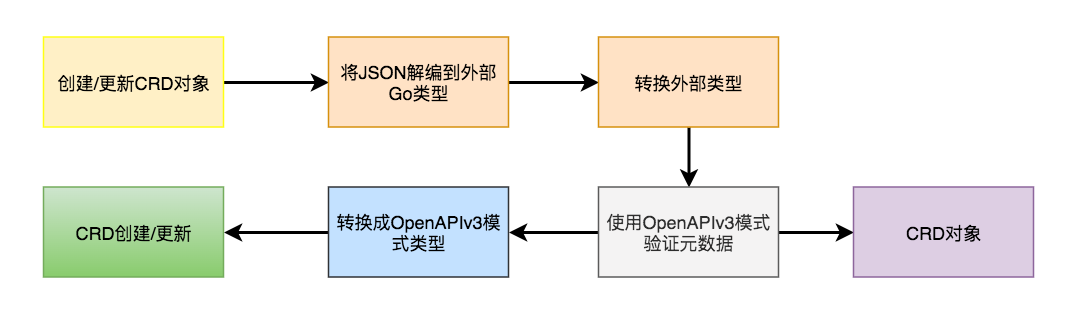

自定义资源定义(CRD)创建 → CRD定义规范和元数据,根据它们创建自定义资源。创建CRD请求时,使用kubernetes内部模式类型(OpenAPI v3模式)验证元数据,然后创建自定义资源定义对象。

自定义资源创建根据元数据和CRD规范验证对象,并相应地创建自定义对象。

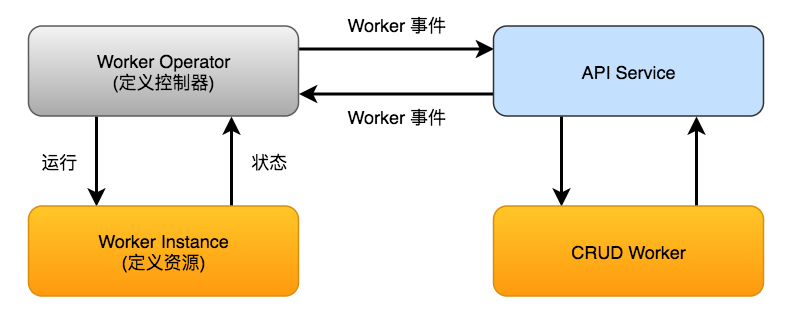

Operator ( Custom Resource ),开始监听事件及其状态的变化,并基于CRD管理自定义资源。它提供对自定义资源执行CRUD操作的事件,因此只要自定义资源的状态发生改变,就会触发相应的事件。

采集目标的服务发现和自动配置¶

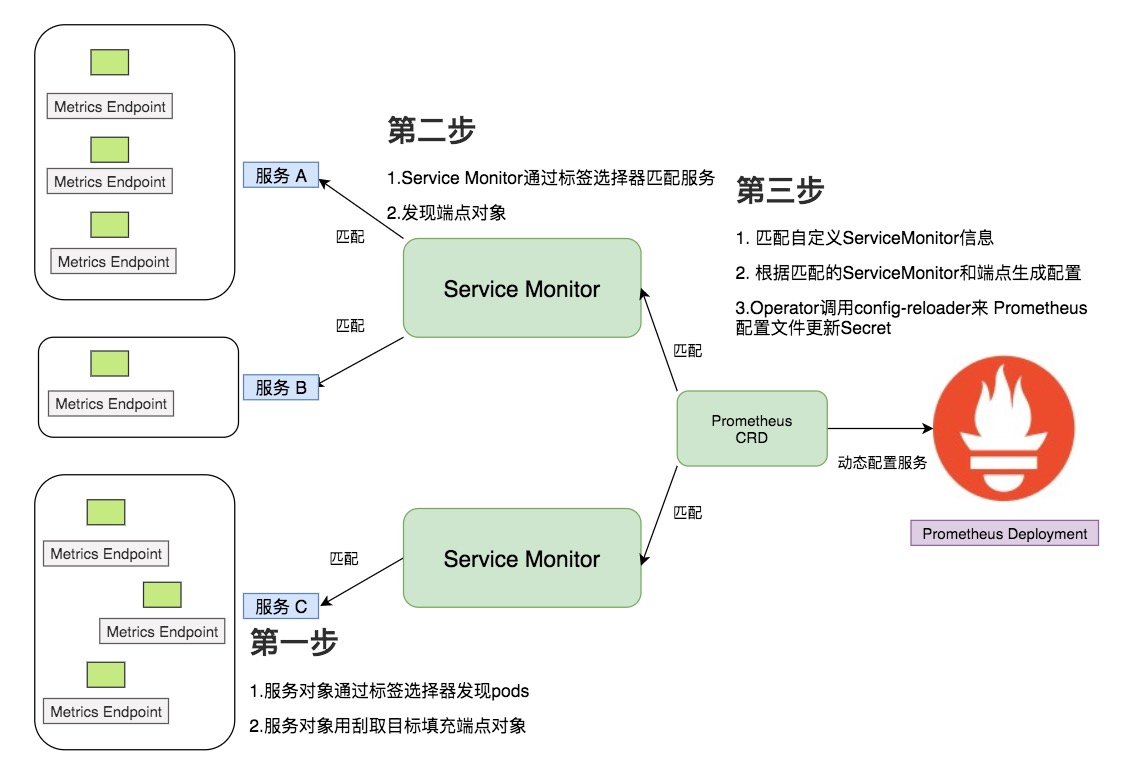

Prometheus Operator 使用Service Monitor CRD来执行采集目标的自动发现和自动配置。

ServiceMonitor 涉及的组件

-

Service: 一组运行Pod的网络服务抽象,用于暴露 Endpoint Port,并使用自定义的标签进行标记。当服务或者Pod关闭时,服务发现会根据定义的标记进行匹配,然后生成相关监控配置信息。

-

ServiceMonitor: 基于标签匹配发现服务的自定义资源。ServiceMonitor应该部署在Prometheus CRD的Namespace中,可以使用namespaceSelector定义发现部署在其他名称空间中的服务。

-

Prometheus CRD: 基于标签匹配服务监控资源定义,并为Prometheus生成相关配置信息。

-

Prometheus Operator 调用config-reloader组件来自动更新配置yaml,其中包含抓取目标细节。
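To make the label-matching relationship concrete, the snippet below writes a minimal ServiceMonitor manifest to a file. It is an illustrative sketch, not part of kube-prometheus: the application name `example-app`, its `app` label, the `default` namespace, and the port name `web` are all hypothetical and would need to match a real Service.

```shell
# Write a minimal ServiceMonitor manifest (all names below are hypothetical).
cat > example-servicemonitor.yaml <<'EOF'
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: example-app
  namespace: monitoring        # same namespace as the Prometheus resource
spec:
  selector:
    matchLabels:
      app: example-app         # label carried by the Service to discover
  namespaceSelector:
    matchNames:
      - default                # namespace where that Service lives
  endpoints:
    - port: web                # name of the Service port that serves metrics
      interval: 30s
EOF

# Against a real cluster, apply it with:
#   kubectl apply -f example-servicemonitor.yaml
echo "wrote example-servicemonitor.yaml"
```

The `selector` picks Services by label, `namespaceSelector` widens the search beyond the ServiceMonitor's own namespace, and `endpoints[].port` refers to a named port on the Service rather than a port number.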

Deploying Prometheus Operator

# First clone the kube-prometheus repository locally (into /data here); if GitHub is slow, use the github.com.cnpmjs.org mirror

cd /data

git clone https://github.com/prometheus-operator/kube-prometheus.git

cd /data/kube-prometheus/manifests

# These are all the CRD resources

kubectl apply -f /data/kube-prometheus/manifests/setup/
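Applying the remaining manifests immediately after `setup/` can fail if the API server has not yet finished registering the new CRDs. A small retry helper is one way to bridge that gap. This is a sketch under assumptions: `wait_until` and `RETRY_INTERVAL` are hypothetical names, not part of kube-prometheus, and the probe command in the closing comment is similar to the wait loop suggested in the kube-prometheus README.

```shell
# Retry a command until it succeeds or the attempt budget runs out.
# usage: wait_until <max_attempts> <command> [args...]
wait_until() {
  attempts="$1"
  shift
  i=1
  while [ "$i" -le "$attempts" ]; do
    # Success: the probed command exited with status 0.
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    sleep "${RETRY_INTERVAL:-1}"
    i=$((i + 1))
  done
  return 1
}

# Intended use between the two `kubectl apply` steps (not executed here):
#   wait_until 60 kubectl get servicemonitors --all-namespaces
```

`kubectl get servicemonitors` exits non-zero while the resource type is still unknown to the API server, so the helper returns as soon as the ServiceMonitor CRD is usable.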

# These are the Deployment, StatefulSet, Service, and rules manifests for Prometheus, Alertmanager, Grafana, and so on

kubectl apply -f /data/kube-prometheus/manifests/

Check the Pods deployed in the monitoring namespace:

kubectl -n monitoring get pod

NAME READY STATUS RESTARTS AGE

alertmanager-main-0 2/2 Running 0 4m7s

alertmanager-main-1 2/2 Running 0 4m7s

alertmanager-main-2 2/2 Running 0 4m7s

grafana-85c89999cb-g9ng2 1/1 Running 0 3m58s

kube-state-metrics-79755744fc-f4lws 3/3 Running 0 3m56s

node-exporter-45s2q 2/2 Running 0 3m55s

node-exporter-f4rrw 2/2 Running 0 3m55s

node-exporter-hvtzj 2/2 Running 0 3m55s

node-exporter-nlvfq 2/2 Running 0 3m55s

node-exporter-qbd2q 2/2 Running 0 3m55s

node-exporter-zjrh4 2/2 Running 0 3m55s

prometheus-adapter-b8d458474-9829m 1/1 Running 0 3m51s

prometheus-k8s-0 3/3 Running 1 3m46s

prometheus-k8s-1 3/3 Running 1 3m46s

prometheus-operator-7df597b86b-b852l 2/2 Running 0 6m32s

Once the Pods are running, check that the monitoring APIService was registered successfully.

kubectl get APIService | grep monitor

v1.monitoring.coreos.com Local True 7m6s

v1beta1.metrics.k8s.io monitoring/prometheus-adapter True 8d

Inspect the monitoring.coreos.com API. If the output is not displayed as formatted JSON, install jq to pretty-print it.

kubectl get --raw /apis/monitoring.coreos.com/v1 | jq .

{

"kind": "APIResourceList",

"apiVersion": "v1",

"groupVersion": "monitoring.coreos.com/v1",

"resources": [

{

"name": "probes",

"singularName": "probe",

"namespaced": true,

"kind": "Probe",

"verbs": [

"delete",

"deletecollection",

"get",

"list",

"patch",

"create",

"update",

"watch"

],

"storageVersionHash": "x4d99qNb5YI="

},

{

"name": "alertmanagers",

"singularName": "alertmanager",

"namespaced": true,

"kind": "Alertmanager",

"verbs": [

"delete",

"deletecollection",

"get",

"list",

"patch",

"create",

"update",

"watch"

],

"storageVersionHash": "NshW3zg1K7o="

},

{

"name": "prometheusrules",

"singularName": "prometheusrule",

"namespaced": true,

"kind": "PrometheusRule",

"verbs": [

"delete",

"deletecollection",

"get",

"list",

"patch",

"create",

"update",

"watch"

],

"storageVersionHash": "RSJ8iG+KDOo="

},

{

"name": "podmonitors",

"singularName": "podmonitor",

"namespaced": true,

"kind": "PodMonitor",

"verbs": [

"delete",

"deletecollection",

"get",

"list",

"patch",

"create",

"update",

"watch"

],

"storageVersionHash": "t6BHpUAzPig="

},

{

"name": "thanosrulers",

"singularName": "thanosruler",

"namespaced": true,

"kind": "ThanosRuler",

"verbs": [

"delete",

"deletecollection",

"get",

"list",

"patch",

"create",

"update",

"watch"

],

"storageVersionHash": "YBxpg/kA6UI="

},

{

"name": "prometheuses",

"singularName": "prometheus",

"namespaced": true,

"kind": "Prometheus",

"verbs": [

"delete",

"deletecollection",

"get",

"list",

"patch",

"create",

"update",

"watch"

],

"storageVersionHash": "C8naPY4eojU="

},

{

"name": "servicemonitors",

"singularName": "servicemonitor",

"namespaced": true,

"kind": "ServiceMonitor",

"verbs": [

"delete",

"deletecollection",

"get",

"list",

"patch",

"create",

"update",

"watch"

],

"storageVersionHash": "JLhPcfa+5xE="

}

]

}

List the deployed CRDs:

kubectl get crd|grep monitoring

NAME CREATED AT

alertmanagers.monitoring.coreos.com 2020-09-04T09:11:22Z

podmonitors.monitoring.coreos.com 2020-09-04T09:11:22Z

probes.monitoring.coreos.com 2020-09-04T09:11:22Z

prometheuses.monitoring.coreos.com 2020-09-04T09:11:23Z

prometheusrules.monitoring.coreos.com 2020-09-04T09:11:24Z

servicemonitors.monitoring.coreos.com 2020-09-04T09:11:24Z

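thanosrulers.monitoring.coreos.com 2020-09-04T09:11:25Z

| CRD | Description |
|---|---|
| prometheuses | Defines the desired Prometheus deployment. |
| alertmanagers | Defines the desired Alertmanager deployment. |
| podmonitors | Declaratively specifies how groups of Pods should be monitored. The Prometheus scrape configuration is generated automatically from the current state of the objects in the API server. |
| probes | Declaratively specifies how groups of Ingresses or static targets should be monitored. The Prometheus scrape configuration is generated automatically from the definition. |
| prometheusrules | Defines a desired set of Prometheus alerting rules or recording rules. A rules file is generated from it and loaded by the Prometheus instances. |
| servicemonitors | Declaratively specifies how groups of Kubernetes Services should be monitored. The Prometheus scrape configuration is generated automatically from the current state of the objects in the API server. |
| thanosrulers | Defines the desired Thanos Ruler deployment. |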

Installing ingress-nginx

cat ngress-nginx-svc.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
---
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
    - name: https
      port: 443
      targetPort: 443
      protocol: TCP
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

cat ngress-nginx-mandatory.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      hostNetwork: true
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.30.0
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 101
            runAsUser: 101
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
---
apiVersion: v1
kind: LimitRange
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  limits:
    - min:
        memory: 90Mi
        cpu: 100m

      type: Container

Configuring ingress-nginx

With the controller in place, ingress-nginx can now be configured to expose the services externally.

prometheus

cat prometheus-service.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: prometheus-ingress
  namespace: monitoring
  annotations:
    nginx.ingress.kubernetes.io/affinity: cookie
    nginx.ingress.kubernetes.io/session-cookie-name: "prometheus-cookie"
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
    kubernetes.io/ingress.class: nginx
    certmanager.k8s.io/cluster-issuer: "letsencrypt-local"
    kubernetes.io/tls-acme: "false"
spec:
  rules:
    - host: prom.awslabs.cn
      http:
        paths:
          - path: /
            backend:
              serviceName: prometheus-k8s
              servicePort: web
  tls:
    - hosts:
        - prom.awslabs.cn

grafana

cat grafana-service.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: grafana-ingress
  namespace: monitoring
  annotations:
    nginx.ingress.kubernetes.io/affinity: cookie
    nginx.ingress.kubernetes.io/session-cookie-name: "grafana-cookie"
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
    kubernetes.io/ingress.class: nginx
    certmanager.k8s.io/cluster-issuer: "letsencrypt-local"
    kubernetes.io/tls-acme: "false"
spec:
  rules:
    - host: grafana.awslabs.cn
      http:
        paths:
          - path: /
            backend:
              serviceName: grafana
              servicePort: http
  tls:
    - hosts:
        - grafana.awslabs.cn

alertmanager

cat alertmanager-service.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: alertmanager-ingress
  namespace: monitoring
  annotations:
    nginx.ingress.kubernetes.io/affinity: cookie
    nginx.ingress.kubernetes.io/session-cookie-name: "alert-cookie"
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
    kubernetes.io/ingress.class: nginx
    certmanager.k8s.io/cluster-issuer: "letsencrypt-local"
    kubernetes.io/tls-acme: "false"
spec:
  rules:
    - host: alert.awslabs.cn
      http:
        paths:
          - path: /
            backend:
              serviceName: alertmanager-main
              servicePort: web
  tls:
    - hosts:
        - alert.awslabs.cn
      #secretName: alertmanager-tls

Apply the three manifests, then use internal DNS or a local hosts file entry to reach the services by domain name.

kubectl apply -f prometheus-service.yaml

kubectl apply -f grafana-service.yaml

kubectl apply -f alertmanager-service.yaml

Adding kube-controller-manager and kube-scheduler monitoring

Check whether your components expose metrics over https or http and adjust accordingly. The files to adjust are prometheus-serviceMonitorKubeControllerManager.yaml and prometheus-serviceMonitorKubeScheduler.yaml.
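As an illustration of what such an adjustment might look like for an https endpoint, the snippet below writes out a ServiceMonitor `endpoints` fragment. It is a hedged sketch, not the content of the kube-prometheus files: the port name `https-metrics` is an assumption that must match the Service port name you define, and `insecureSkipVerify: true` disables certificate verification, which is only reasonable for testing.

```shell
# Write an example https endpoint fragment for a ServiceMonitor spec.
# This is a fragment for comparison, not a complete manifest.
cat > servicemonitor-https-endpoint-example.yaml <<'EOF'
spec:
  endpoints:
    - port: https-metrics          # assumed Service port name for the secure port
      scheme: https
      interval: 30s
      bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
      tlsConfig:
        insecureSkipVerify: true   # skips certificate verification (testing only)
EOF
echo "wrote servicemonitor-https-endpoint-example.yaml"
```

For the plain http ports used in the Service definitions in this section (10252 and 10251, named `http-metrics`), the endpoint would instead reference `port: http-metrics` with no `scheme` or `tlsConfig`.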

cat kube-controller-manager-scheduler.yaml

apiVersion: v1
kind: Service
metadata:
  namespace: kube-system
  name: kube-controller-manager
  labels:
    k8s-app: kube-controller-manager
spec:
  selector:
    component: kube-controller-manager
  type: ClusterIP
  clusterIP: None
  ports:
    - name: http-metrics
      port: 10252
      targetPort: 10252
      protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  namespace: kube-system
  name: kube-scheduler
  labels:
    k8s-app: kube-scheduler
spec:
  selector:
    component: kube-scheduler
  type: ClusterIP
  clusterIP: None
  ports:
    - name: http-metrics
      port: 10251
      targetPort: 10251
      protocol: TCP

If the components run as Pods, the Endpoints need no attention. If they do not run as Pods, look up the current endpoint IPs yourself and add them to the Endpoints objects.

# Add the cluster's endpoint IPs

cat kube-controller-manager-scheduler-endpoint.yaml

apiVersion: v1
kind: Endpoints
metadata:
  labels:
    k8s-app: kube-controller-manager
  name: kube-controller-manager
  namespace: kube-system
subsets:
  - addresses:
      - ip: 192.168.1.151
      - ip: 192.168.1.152
      - ip: 192.168.1.150
    ports:
      - name: http-metrics
        port: 10252
        protocol: TCP
---
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    k8s-app: kube-scheduler
  name: kube-scheduler
  namespace: kube-system
subsets:
  - addresses:
      - ip: 192.168.1.151
      - ip: 192.168.1.152
      - ip: 192.168.1.150
    ports:
      - name: http-metrics
        port: 10251
        protocol: TCP

On the targets page at prom.awslabs.cn, kube-controller-manager and kube-scheduler now appear as targets.

Prometheus Operator is now deployed. Inspect the ConfigMaps, Secrets, Services, Endpoints, Deployments, and StatefulSets it created and adjust them to your needs.